Effect of Biased Training Data on Loss Landscape of Deep Neural Networks

[Code] [Report]

Network architecture: an extension of the baseline model with added skip connections; the encoder and decoder blocks are residual blocks.

Abstract

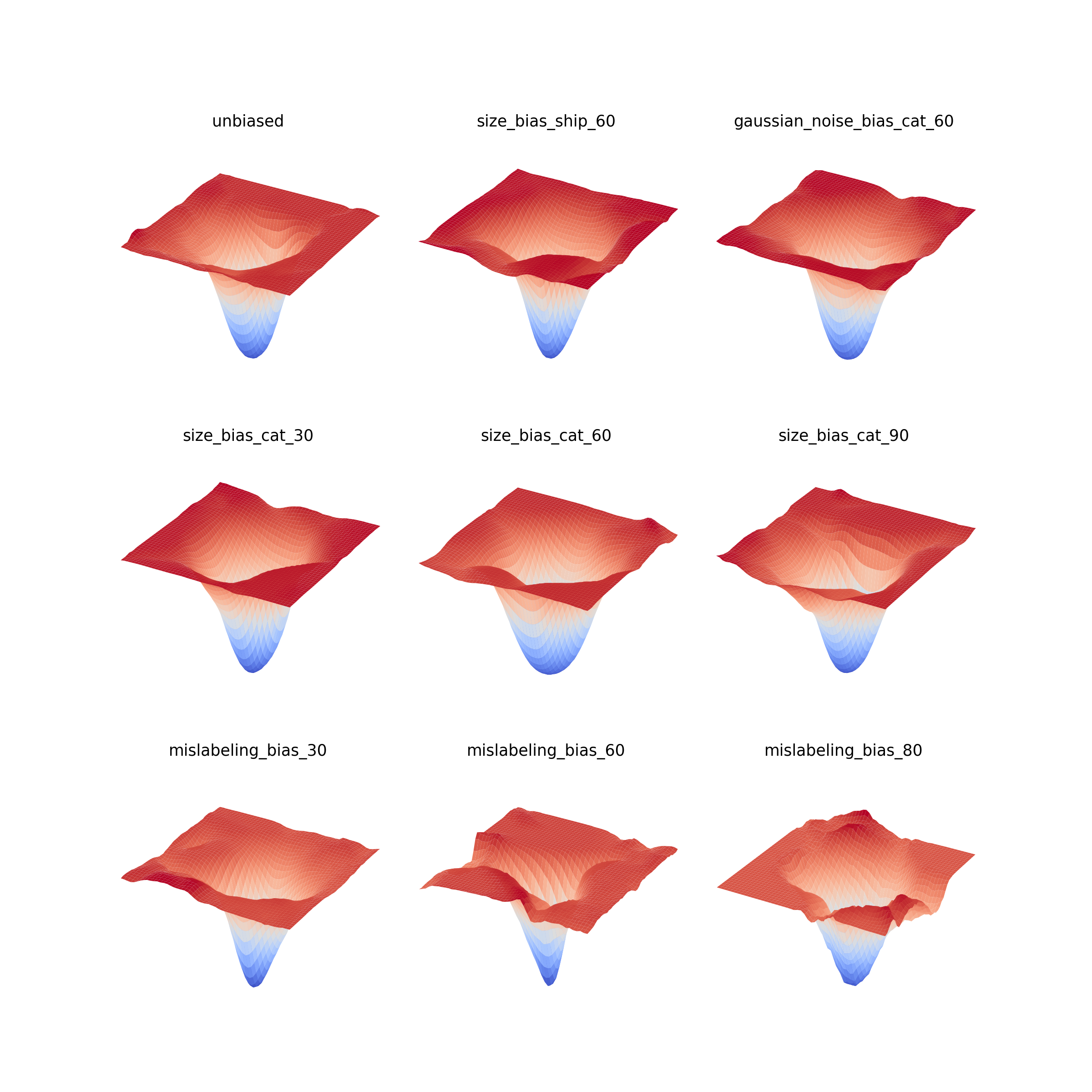

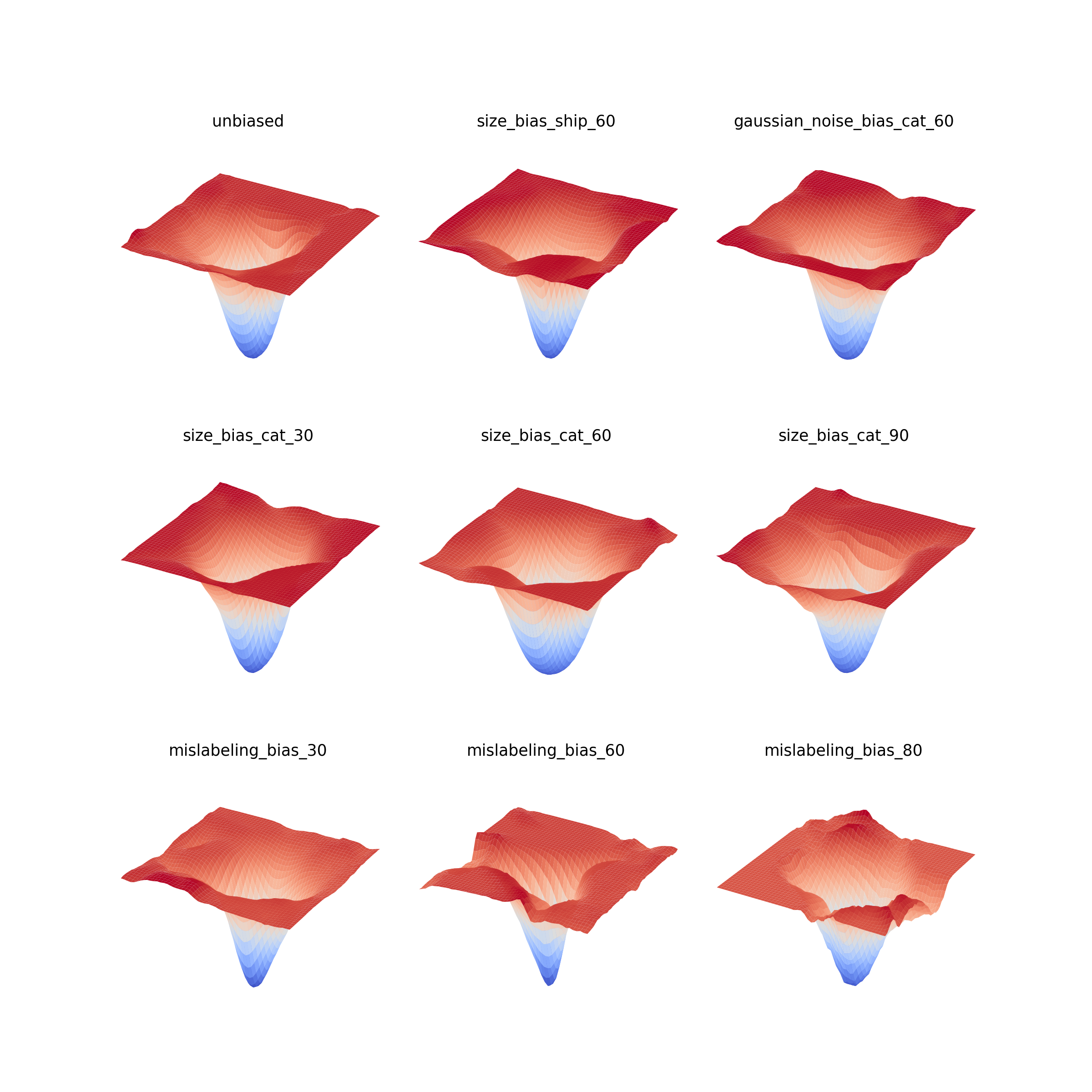

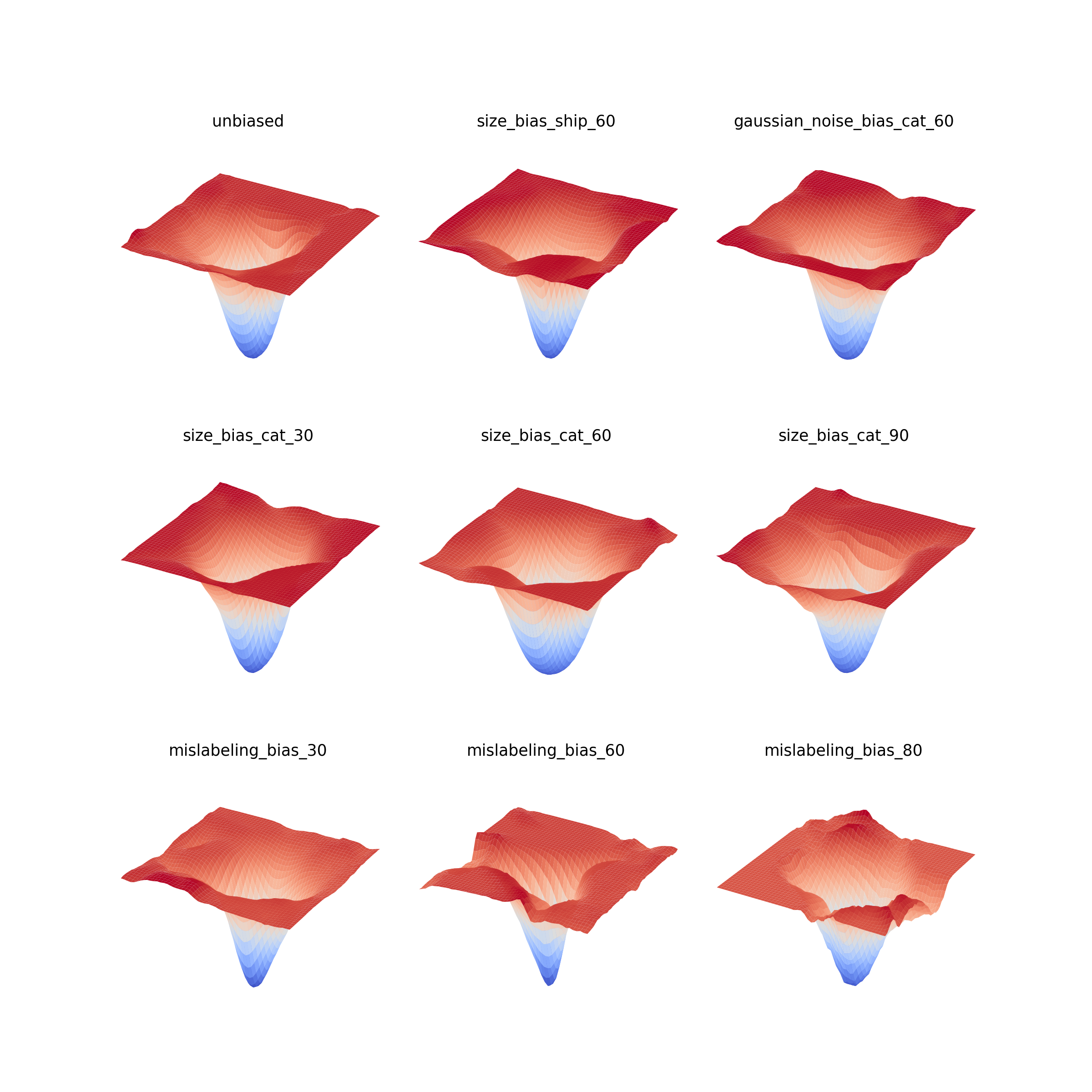

Many studies report a positive correlation between the generalization ability of a deep neural network and the flatness of the minima in its loss landscape. Motivated by this observation, many studies have investigated how different training parameters and network architectures affect the loss landscape of a neural network. This study investigates how training a deep neural network on a biased dataset affects its loss landscape, by visualizing the loss landscape of the trained model. We found that different types of bias in the training dataset can affect the geometry of the loss landscape around the minima.
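
To make the idea of visualizing the loss landscape around a minimum concrete, here is a minimal sketch. It is not the code used in the report. It assumes a common approach: evaluate the loss on a 2D slice through the trained parameters, spanned by two random unit-norm directions. The names `loss_surface` and `make_quadratic` are hypothetical, and a toy quadratic loss stands in for a trained network so that the example runs with NumPy alone.

```python
import numpy as np

def loss_surface(loss_fn, theta_star, span=1.0, steps=21, seed=0):
    """Evaluate loss_fn on a 2D slice through theta_star.

    The slice is spanned by two random unit-norm directions (an assumption
    of this sketch; other normalization schemes exist).
    """
    rng = np.random.default_rng(seed)
    d1 = rng.standard_normal(theta_star.shape)
    d2 = rng.standard_normal(theta_star.shape)
    d1 /= np.linalg.norm(d1)
    d2 /= np.linalg.norm(d2)

    alphas = np.linspace(-span, span, steps)
    betas = np.linspace(-span, span, steps)
    surface = np.empty((steps, steps))
    for i, a in enumerate(alphas):
        for j, b in enumerate(betas):
            # Loss at the minimum perturbed by a*d1 + b*d2
            surface[i, j] = loss_fn(theta_star + a * d1 + b * d2)
    return alphas, betas, surface

def make_quadratic(curvature):
    """Toy stand-in for a network loss: larger curvature means a sharper minimum."""
    return lambda theta: 0.5 * curvature * float(np.sum(theta ** 2))

theta_star = np.zeros(10)  # hypothetical "trained" parameters at the minimum
_, _, flat = loss_surface(make_quadratic(0.1), theta_star)
_, _, sharp = loss_surface(make_quadratic(10.0), theta_star)

# The sharper minimum rises much faster away from theta_star.
print("max loss on slice (flat): ", flat.max())
print("max loss on slice (sharp):", sharp.max())
```

For a real model, `loss_fn` would load the perturbed parameter vector into the network and return the loss on a fixed evaluation set; the resulting `surface` array can then be passed to a contour or surface plot to compare models trained on biased and unbiased data.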